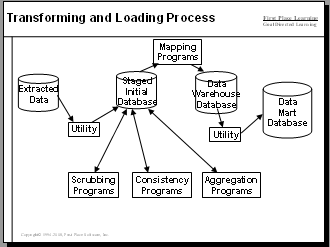

| Stocking the data warehouse with data is often the most time consuming task needed to make data warehousing and business intelligence a success. In the overall scheme of things Extract-Transform-Load (ETL) often requires about 70 percent of the total effort. Extracting data for the data warehouse includes: Making ETL Architecture Choices Data Mapping Extracting data to staging area Applying data cleansing transformations Applying data consistency transformations Loading data Before starting the ETL step for the data warehousing and business intelligence project it is important to determine the business requirements. See the articleRequirements for Data Warehousing and Business Intelligence for more information. Also, the data sources and targets must be defined. See articles Data Sources for Data Warehousing and Business Intelligence and Data Models for Data Warehousing and Business Intelligence to understand this. |

Making ETL Architecture Choices for the Data Warehouse

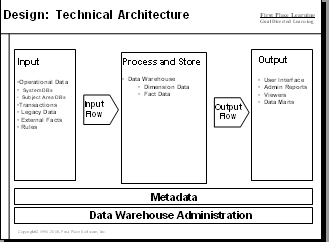

ETL has a prominent place in data warehousing and business intelligence architecture.

Selecting the right ETL Tools is critical to the success the data warehousing and business intelligence project. Should your company acquire a top of the line specialized ETL tool suite, use lower cost Open Source ETL, or use "Tools at Hand"? The article ETL Tool Selection for the Data Warehouse describes these options along with their pros and cons.

Consider these performance improvement methods:

- Turn off database logging to avoid the overhead of log insertions

- Load using a bulk load utility which does not log

- Primary keys should be single integers

- Drop relational integrity (RI) / foreign keys - restore after load is complete

- Drop indexes and re-build after load

- Partition data leaving data loaded earlier unchanged

- Load changed data only - use "delta" processing

- Avoid SQL Update with logging overhead - possibly drop rows and reload using bulk loader

- Do a small number of updates with SQL Update, then use bulk load for inserts

- Use Cyclic Redundancy Checksum (CRC) to detect changes in data rather than brute force method of comparing each column

- Divide SQL Updates into groups to avoid a big rollback log being create.

- Use an ETL tool that supports parallelism

- Use an ETL tool that supports caching

- Use RAID technologies

- Use fast disk and controllers - 15,000 RPM

- Dedicate servers and disk to business intelligence - do not share with other applications

- Use multiple servers to support BI such as: a database server, an analysis server and a reporting server

- Use a server with large main memory (16 GB +) - this increases data caching and reduces physical data access

- Use a server with multiple processors / cores to enable greater parallelism

Data Mapping for Data Warehousing and Business Intelligence

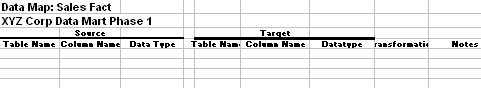

A Data Map is specification that identifies data sources and targets as well as the mapping between them. The Data Map specification is created and reviewed with input by business Subject Material Experts (SMEs) who understand the data.There are two levels of mapping, entity level and attribute level. Each target entity (table) will have a high level mapping description and will be supported by a detailed attribute level mapping specification.

| Target Table Name | dw_customer |

| Target Table Description | High level information about a customer such as name, customer type and customer status. |

| Source Table Names | dwprod1.dwstage.crm_cust dwprod1.dwstage.ord_cust |

| Join Rules | crm_cust.custid = ord_cust.cust.cust_nbr |

| Filter Criteria | crm_cust.cust_type not = 7 |

| Additional Logic | N/A |

- Source: table name, column name, datatype

- Target: table name, column name, datatype

- Transformation Rule

- Notes

Transformations may include:

- Aggregate

- Substring

- Concatenate

- Breakout Array Values / Buckets

1 comments:

its really very nice

sreekanth( www.train4job.com)

Post a Comment